Summary: A semi-automated, interactive, artificial-intelligence-based method for selecting a structure in a medical image that requires only limited input from the user.

What

Segmentation is used extensively in medical imaging to highlight specific structures (e.g. an organ, a blood vessel or a tumour) and locations that should be analysed more closely. Researchers at King’s have developed a segmentation technique termed “Scribble”, which uses machine learning to create a first segmentation of a medical image. Users interact by drawing short lines (scribbles) on a display to confirm the first segmentation or to mark incorrectly segmented regions. An artificial intelligence algorithm then produces an optimised segmentation of the medical image being assessed. The original algorithm was trained on medical images of the placenta and of brain tumours.

Why

Segmentation of anatomical structures is an important task for many medical image processing applications, such as diagnosis, anatomical modelling, or surgical planning. Automatic segmentation methods rarely achieve sufficiently accurate and robust results, owing to poor image quality (noise, artefacts and low contrast), variation among patients, pathologies, and variability between acquisition techniques. Interactive segmentation methods, which take advantage of a user's knowledge of anatomy, are widely used for higher accuracy and robustness, but they depend on the skill of the user and impose a greater burden in terms of processing time and the number of images that can be processed.

Benefits

The proposed segmentation method integrates user interactions with artificial intelligence to obtain accurate and robust segmentation of medical images, while making the interactive framework more efficient by requiring only a minimal number of user interactions.

Opportunity

The technology is protected by a US patent and a European patent, and is available for licensing. Suitable commercial partners are sought to bring the algorithm to the market.

The Science

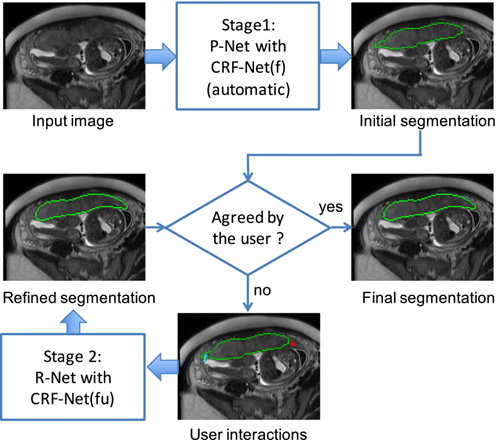

A first automatic segmentation of a medical image is obtained via a first machine learning algorithm (in Fig. 1, a P-Net convolutional neural network). The technology then uses “scribbles” drawn by a user on the first segmentation to compute a geodesic distance map. Specifically, the scribbles are drawn on mis-segmented areas for refinement (represented by red and light blue lines under the user-interaction block in Fig. 1). A further machine learning algorithm (in Fig. 1, an R-Net convolutional neural network) uses the computed geodesic distance map to update the segmentation of the medical image.

Figure: Overview of the proposed interactive segmentation framework with two stages. Stage 1: P-Net automatically proposes an initial segmentation. Stage 2: R-Net refines the segmentation with user interactions (scribbles) indicating mis-segmentations. CRF-Net(f) is a back-propagatable Conditional Random Field (CRF) that uses freeform pairwise potentials. CRF-Net(fu) differs from CRF-Net(f) as it employs the user interactions (scribbles) as hard constraints.
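
To illustrate what a geodesic distance map derived from scribbles might look like, the following is a minimal sketch in Python. It is not the patented implementation: it assumes a 2D greyscale image, a 4-connected pixel grid, and a step cost equal to the absolute intensity difference between neighbouring pixels, and it uses Dijkstra's shortest-path algorithm. The function name `geodesic_distance_map` and its parameters are hypothetical and chosen only for this example.

```python
import heapq


def geodesic_distance_map(image, seeds):
    """Return the geodesic distance from every pixel to the nearest seed pixel.

    image: 2D list of intensities (rows of equal length).
    seeds: iterable of (row, col) pixels covered by the user's scribbles.
    Assumption: moving between 4-connected neighbours costs the absolute
    intensity difference, so paths that cross strong edges are expensive.
    """
    rows, cols = len(image), len(image[0])
    dist = [[float("inf")] * cols for _ in range(rows)]
    heap = []
    for r, c in seeds:
        dist[r][c] = 0.0
        heap.append((0.0, r, c))
    heapq.heapify(heap)

    # Dijkstra's algorithm over the pixel grid.
    while heap:
        d, r, c = heapq.heappop(heap)
        if d > dist[r][c]:
            continue  # stale queue entry, a shorter path was already found
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols:
                nd = d + abs(image[nr][nc] - image[r][c])
                if nd < dist[nr][nc]:
                    dist[nr][nc] = nd
                    heapq.heappush(heap, (nd, nr, nc))
    return dist


# Toy image: a uniform region (0.0) next to a brighter column (5.0).
image = [
    [0.0, 0.0, 5.0],
    [0.0, 0.0, 5.0],
    [0.0, 0.0, 5.0],
]
# One scribble pixel in the top-left corner.
dist = geodesic_distance_map(image, [(0, 0)])
```

In this toy case, pixels in the uniform region containing the scribble have distance 0, while pixels across the intensity edge have distance 5. A map with this property lets a single short scribble influence a whole homogeneous region while stopping at boundaries, which is consistent with the aim of keeping user interactions to a minimum.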

Patent Status